
AI Breakthrough in Quantum Computing: AlphaQubit Boosts Error Correction Accuracy

In a significant leap toward practical quantum computing, Google DeepMind and Google Quantum AI have unveiled AlphaQubit, an artificial intelligence-based decoder that sets a new benchmark for quantum error correction. The work, detailed in a study published in Nature, combines advanced machine learning techniques with quantum computing principles to address one of the field’s greatest challenges: noise and the errors it causes.

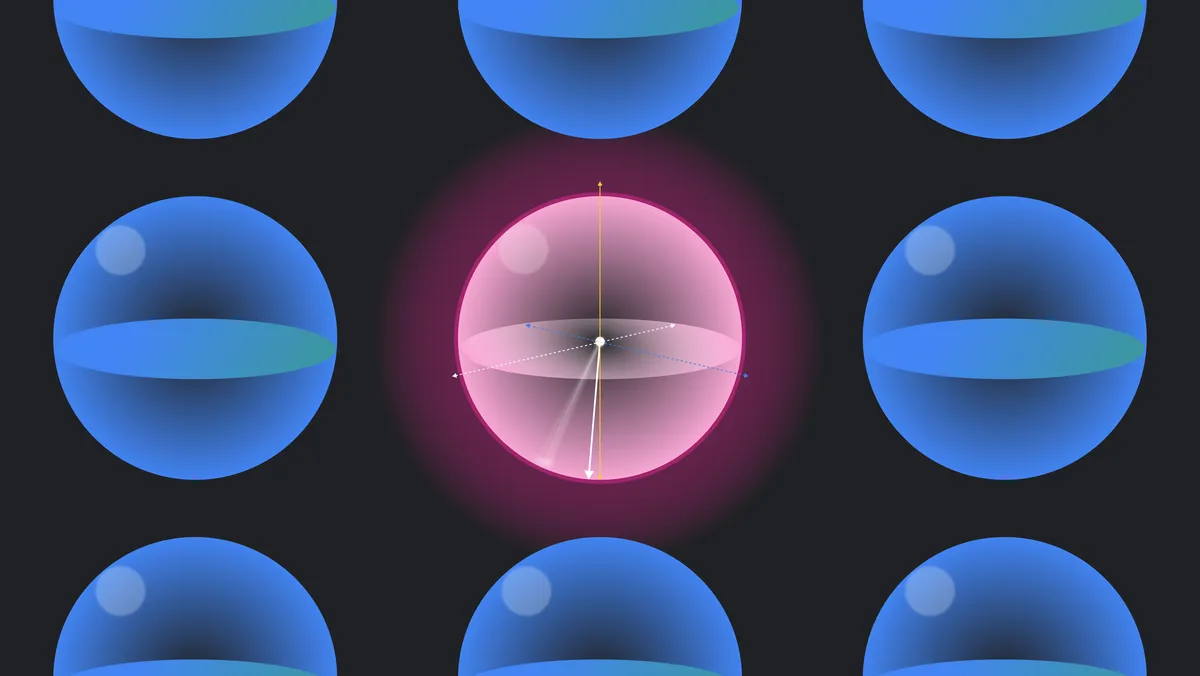

Quantum computers, hailed for their potential to revolutionize fields like drug discovery, materials design, and fundamental physics, operate on qubits: quantum bits that exploit phenomena such as superposition and entanglement. Unlike classical bits, which are always either 0 or 1, qubits can occupy complex quantum states, which could allow quantum computers to solve certain problems exponentially faster than conventional machines. However, qubits are notoriously fragile and easily disrupted by environmental factors such as heat, electromagnetic interference, and even cosmic rays.

The Challenge of Error Correction

Errors in quantum computations can derail entire calculations, making error correction essential for scalability and reliability. Current methods group multiple physical qubits into a single “logical qubit” and regularly perform consistency checks to detect and correct errors. However, as the number of qubits grows, decoding these checks (working out which errors actually occurred) becomes increasingly demanding computationally.
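
To make the idea of consistency checks concrete, here is a minimal sketch using a classical repetition code, a deliberately simplified stand-in for the quantum codes Google actually uses. It copies one logical bit into several physical bits, measures the parity of each neighbouring pair, and infers the most likely set of flips from those parities alone. The function names and the independent bit-flip noise model are illustrative assumptions; real quantum hardware cannot read data qubits directly and must also handle phase errors.

```python
import random


def encode(logical_bit: int, n: int = 5) -> list[int]:
    """Repetition code: store one logical bit in n physical bits."""
    return [logical_bit] * n


def add_bit_flips(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Toy noise model (assumption): flip each bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def syndrome(bits: list[int]) -> tuple[int, ...]:
    """Consistency checks: parity of each neighbouring pair (1 means the pair disagrees)."""
    return tuple(bits[i] ^ bits[i + 1] for i in range(len(bits) - 1))


def correction_from_syndrome(s: tuple[int, ...]) -> list[int]:
    """Minimum-weight decoding: exactly two flip patterns fit any syndrome; keep the lighter."""
    pattern = [0]
    for check in s:
        pattern.append(pattern[-1] ^ check)
    complement = [b ^ 1 for b in pattern]
    return pattern if sum(pattern) <= sum(complement) else complement


def decode(bits: list[int]) -> int:
    """Infer the correction from the checks alone, apply it, and read the logical bit."""
    fix = correction_from_syndrome(syndrome(bits))
    corrected = [b ^ f for b, f in zip(bits, fix)]
    return corrected[0]  # after correction every bit agrees


if __name__ == "__main__":
    rng = random.Random(0)
    trials, p = 10_000, 0.05
    failures = sum(decode(add_bit_flips(encode(1), p, rng)) != 1 for _ in range(trials))
    print(f"physical flip rate {p}, logical error rate ~{failures / trials:.4f}")
```

With these settings the logical error rate should come out on the order of 0.1%, far below the 5% physical flip rate, because the decoder only fails when three or more of the five bits flip at once.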

AlphaQubit represents a breakthrough here. It is built on the transformer, the neural network architecture that also underpins large language models, and it interprets the consistency checks from a logical qubit to predict errors with unprecedented accuracy.
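
The core operation of a transformer is self-attention, which lets every input token weigh information from every other token. The sketch below applies a single attention head to one token per consistency check, then pools the result into a probability that a logical error occurred. It is a toy in plain Python for readability: the feature layout, model size, and weights are hypothetical and untrained, and AlphaQubit's published architecture is considerably more elaborate.

```python
import math
import random


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w: list[list[float]], v: list[float]) -> list[float]:
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention over a list of token vectors."""
    q = [matvec(wq, t) for t in tokens]
    k = [matvec(wk, t) for t in tokens]
    v = [matvec(wv, t) for t in tokens]
    scale = math.sqrt(len(q[0]))
    outputs, weights = [], []
    for qi in q:
        w = softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k])
        weights.append(w)
        # Each output mixes every token's value vector, weighted by attention.
        outputs.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return outputs, weights


def embed(syndrome_bits) -> list[list[float]]:
    """Hypothetical per-check features: [check outcome, normalised position, bias]."""
    n = len(syndrome_bits)
    return [[float(s), i / max(n - 1, 1), 1.0] for i, s in enumerate(syndrome_bits)]


def init_params(d_model: int = 4, seed: int = 0) -> dict:
    """Random, untrained weights; a real decoder would learn these from data."""
    rng = random.Random(seed)

    def mat(rows: int, cols: int) -> list[list[float]]:
        return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        "wq": mat(d_model, 3),
        "wk": mat(d_model, 3),
        "wv": mat(d_model, 3),
        "readout": [rng.gauss(0.0, 0.5) for _ in range(d_model)],
    }


def predict_logical_flip(syndrome_bits, params) -> float:
    """Attend across all checks, mean-pool, and squash to a probability of a logical error."""
    out, _ = self_attention(embed(syndrome_bits), params["wq"], params["wk"], params["wv"])
    pooled = [sum(col) / len(out) for col in zip(*out)]
    logit = sum(a * b for a, b in zip(params["readout"], pooled))
    return 1.0 / (1.0 + math.exp(-logit))


if __name__ == "__main__":
    params = init_params()
    print(predict_logical_flip((0, 1, 1, 0), params))
```

Attention is a natural fit for this task because a single error typically trips several neighbouring checks at once, so the meaning of one check depends on the others.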

Advancing Error Decoding

AlphaQubit was trained using data from a set of 49 qubits on Google’s Sycamore quantum processor, a state-of-the-art superconducting system. To refine its performance, researchers first simulated millions of quantum error scenarios, then fine-tuned the model with experimental data from the processor.
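
The two-stage recipe of learning from plentiful simulated data and then fine-tuning on scarcer hardware data can be illustrated without a neural network. This sketch swaps in a simple counting decoder on a repetition code: for each syndrome it tallies which of the two consistent error patterns occurred, first on simulated samples and then on up-weighted "device" samples. The noise profiles, sample counts, and fine-tuning weight are all hypothetical, and the "device" data is itself simulated with a different, uneven noise profile to mimic a mismatch between simulation and hardware.

```python
import random
from collections import defaultdict


def sample(flip_probs: list[float], rng: random.Random) -> tuple[tuple[int, ...], int]:
    """Simulate one error pattern on a repetition code; return (syndrome, label).
    label = whether bit 0 flipped, which pins down which of the two
    syndrome-consistent error patterns actually occurred."""
    error = [1 if rng.random() < p else 0 for p in flip_probs]
    s = tuple(error[i] ^ error[i + 1] for i in range(len(error) - 1))
    return s, error[0]


class CountingDecoder:
    """Lookup-table decoder: per syndrome, predict the label with the most (weighted) votes."""

    def __init__(self) -> None:
        self.counts: dict = defaultdict(lambda: [0.0, 0.0])

    def update(self, data, weight: float = 1.0) -> None:
        for s, label in data:
            self.counts[s][label] += weight

    def predict(self, s) -> int:
        c0, c1 = self.counts.get(s, (0.0, 0.0))
        return int(c1 > c0)

    def error_rate(self, data) -> float:
        return sum(self.predict(s) != label for s, label in data) / len(data)


if __name__ == "__main__":
    rng = random.Random(1)
    sim_noise = [0.05] * 5                       # assumed: uniform noise in simulation
    device_noise = [0.2, 0.2, 0.2, 0.01, 0.01]   # hypothetical device with uneven noise
    sim_data = [sample(sim_noise, rng) for _ in range(50_000)]
    device_train = [sample(device_noise, rng) for _ in range(2_000)]
    device_test = [sample(device_noise, rng) for _ in range(20_000)]

    decoder = CountingDecoder()
    decoder.update(sim_data)                     # stage 1: learn from simulated scenarios
    print("after simulation only:", decoder.error_rate(device_test))
    decoder.update(device_train, weight=25.0)    # stage 2: fine-tune on scarcer device data
    print("after fine-tuning:    ", decoder.error_rate(device_test))
```

Where the simulated and device noise disagree about which error pattern is more likely, the second stage shifts the decoder's choices toward what the device actually does, which is the motivation for fine-tuning on real data.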

The results were striking. In tests on Sycamore data, AlphaQubit made 6% fewer errors than tensor network methods, which are highly accurate but too slow for practical use, and 30% fewer errors than correlated matching, a leading scalable decoder. These gains, particularly at larger scales, represent a major step forward in quantum error correction.
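
These percentages are most naturally read as relative reductions in the logical error rate; that reading is an assumption here, since the figures are not defined further. Under it, the arithmetic is simple, and because the two figures are measured against different baselines, they cannot be added together.

```python
def relative_reduction(baseline_rate: float, new_rate: float) -> float:
    """Relative error reduction: (baseline - new) / baseline."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (baseline_rate - new_rate) / baseline_rate


def implied_rate(baseline_rate: float, reduction: float) -> float:
    """Error rate implied by a quoted relative reduction against a baseline."""
    return baseline_rate * (1.0 - reduction)


def baseline_ratio(reduction_a: float, reduction_b: float) -> float:
    """If one decoder beats baseline A by reduction_a and baseline B by reduction_b,
    return error_rate(B) / error_rate(A)."""
    return (1.0 - reduction_a) / (1.0 - reduction_b)
```

For a hypothetical baseline of 3% logical errors, `implied_rate(0.03, 0.06)` gives about 2.82%. `baseline_ratio(0.06, 0.30)` is about 1.34, meaning that, under this reading, correlated matching makes roughly 1.34 times as many errors as the tensor network methods.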

Scaling for the Future

Looking ahead, AlphaQubit’s developers tested it on simulated systems of up to 241 qubits, well beyond current hardware capabilities. Even at this scale it outperformed competing decoders, suggesting it can adapt to the larger quantum systems of the future. AlphaQubit also maintained strong performance in experiments spanning many rounds of error correction, indicating that it remains reliable over long computations.
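
The qubit counts quoted here line up with the rotated surface code, a layout widely used on superconducting processors, which uses d² data qubits plus d² − 1 measure qubits for a code of distance d. Treating that layout as an assumption, since the code is not named here, the following sketch shows how the qubit count grows with distance and how many errors each size can correct.

```python
def surface_code_qubits(distance: int) -> int:
    """Physical qubits in a rotated surface-code patch of the given distance:
    distance**2 data qubits plus distance**2 - 1 measure qubits."""
    if distance < 2:
        raise ValueError("distance must be at least 2")
    return 2 * distance * distance - 1


def correctable_errors(distance: int) -> int:
    """A distance-d code can correct any combination of up to (d - 1) // 2 errors."""
    return (distance - 1) // 2


for d in (3, 5, 7, 9, 11):
    print(f"d={d}: {surface_code_qubits(d)} qubits, corrects up to {correctable_errors(d)} errors")
```

Under that assumption, 49 qubits corresponds to distance 5 and 241 qubits to distance 11, so the simulated codes can correct up to five simultaneous errors rather than two.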

Despite its success, AlphaQubit faces hurdles in real-time use. Quantum processors run extremely fast, performing millions of consistency checks per second, and a decoder must keep pace; that is a tall order even for the most advanced AI models. Researchers aim to make AlphaQubit faster and to explore more efficient training methods so that it can keep up with advances in quantum hardware.
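
A rough budget shows why speed matters. Assuming, hypothetically, one round of checks per microsecond on a distance-5 surface code (24 checks per round), the processor produces about 24 million check outcomes per second. If the decoder needs longer per round than the processor does, unprocessed rounds pile up and the backlog keeps growing for as long as the computation runs. All parameters below are illustrative, not measured figures for Sycamore or AlphaQubit.

```python
def checks_per_second(distance: int, cycle_time_s: float) -> float:
    """Check outcomes produced per second by a rotated surface code of the
    given distance, which measures distance**2 - 1 checks every cycle."""
    return (distance * distance - 1) / cycle_time_s


def backlog_rounds(duration_s: float, cycle_time_s: float, decode_time_s: float) -> float:
    """Rounds still waiting to be decoded after duration_s, assuming the decoder
    processes rounds one at a time with no batching or parallelism."""
    produced = duration_s / cycle_time_s
    decoded = duration_s / decode_time_s
    return max(0.0, produced - decoded)


if __name__ == "__main__":
    cycle = 1e-6  # hypothetical: one round of checks per microsecond
    print(f"{checks_per_second(5, cycle):,.0f} checks/s at distance 5")
    print(f"{backlog_rounds(1.0, cycle, 2e-6):,.0f} rounds queued after 1 s at 2 us/round")
```

A decoder that is even slightly slower than the cycle time falls behind linearly, which is why real-time decoding is treated as a hard requirement rather than a nice-to-have.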

Toward Practical Quantum Computing

AlphaQubit’s success marks a crucial milestone on the road to reliable, large-scale quantum computing. While challenges remain, combining AI with quantum error correction brings humanity closer to unlocking the full potential of quantum computers. These machines, once refined, could tackle problems previously deemed unsolvable, heralding a new era of scientific discovery and technological innovation.

As research continues, collaborations between machine learning and quantum computing promise to shape the future of this transformative technology.